MYGITHUB-Lightweight-Speech-Recognition-Conversion-Model

This is my GitHub project: Vosk-based-bilingual-speech-recognition-application

The text below is its README.md file.

A Vosk-based Chinese-English bilingual speech recognition Python Application

😊Introduction

Vosk is an open-source speech recognition toolkit focused on efficient and accurate speech-to-text. It is based on Kaldi (another well-known speech recognition toolkit), but compared to Kaldi, Vosk is more lightweight and easier to use, which makes it suitable for embedding into various applications.

This application is built on Vosk and implements real-time bilingual (Chinese and English) speech-to-text.

All installation and usage steps are demonstrated on Windows 11.

🚀Installation

Requirements

You need to prepare the following in advance:

- 🐍A working Python environment (Python >= 3.6)

- WE RECOMMEND Python 3.9!

- 🪟Windows operating system

- It may also work on macOS. (I don’t have a MacBook. 🤑)

- 🎙️A working microphone.
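Before moving on, it can help to confirm these requirements programmatically. The following is a minimal sketch, not part of the project, that uses only the standard `sys` and `platform` modules. The version thresholds simply mirror the list above, and the function name `check_environment` is hypothetical. It does not test the microphone, since that would need `sounddevice`.

```python
import platform
import sys

MIN_VERSION = (3, 6)   # minimum version from the requirements list
RECOMMENDED = (3, 9)   # version the README recommends


def check_environment():
    """Return warnings about the interpreter version and operating system."""
    warnings = []
    version = platform.python_version()
    if sys.version_info[:2] < MIN_VERSION:
        warnings.append("Python {} is older than 3.6.".format(version))
    elif sys.version_info[:2] != RECOMMENDED:
        warnings.append("Python {} should work, but 3.9 is recommended.".format(version))
    system = platform.system()  # e.g. "Windows", "Darwin" (macOS), "Linux"
    if system != "Windows":
        warnings.append("{} is untested; the steps were verified on Windows 11.".format(system))
    return warnings


if __name__ == "__main__":
    for message in check_environment() or ["Environment looks good."]:
        print(message)
```

`str.format` is used instead of f-strings so that the file stays readable by older interpreters.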

We strongly recommend installing Anaconda or Miniconda and creating a virtual environment to run the Python code.

Clone the project

Clone the remote repository with `git clone` to obtain the Python source code.

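Then make the cloned folder your current directory, e.g. `cd Vosk-based-bilingual-speech-recognition-application` (by default, `git clone` names the folder after the repository).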

Create a Python environment

🥳If you have installed Anaconda…

Great! Now create a new environment from the command line, for example: `conda create -n translate python=3.9`.

This creates a new Conda environment named “translate”. You can also choose your own environment name!

After that, activate the new environment with `conda activate translate` so that the required packages are installed into it.

Then install the required packages.


You can also install the third-party packages manually: `pip install sounddevice numpy vosk`.

🙂↕️If not?

Then go install Anaconda first!😋

Just kidding. Anaconda is quite large, so you can also skip it and install the packages with pip directly: `pip install sounddevice numpy vosk`.

Required packages

Required packages are as follows:

tkinter: This is the standard GUI toolkit for Python, allowing developers to create graphical user interfaces easily. (Standard Library)

threading: A standard library module that provides a way to create and manage threads, enabling concurrent execution of code. (Standard Library)

queue: This standard library module provides a thread-safe FIFO queue implementation, useful for passing data and tasks between threads. (Standard Library)

time: A standard library module that provides various time-related functions, including time manipulation and formatting. (Standard Library)

json: This standard library module is used for parsing JSON data and converting Python objects to JSON format. (Standard Library)

sounddevice: A third-party library that allows for audio input and output using NumPy arrays, facilitating sound processing tasks. (Requires manual installation)

numpy: A widely-used third-party library for numerical computations in Python, providing support for large, multi-dimensional arrays and matrices. (Requires manual installation)

vosk: A third-party library for speech recognition that provides models for various languages using Kaldi’s speech recognition capabilities. (Requires manual installation)

os: A standard library module that provides a way to interact with the operating system, including file and directory management functions. (Standard Library)

platform: This standard library module provides access to the underlying platform’s identifying data, such as the OS type and version. (Standard Library)
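To see at a glance which of these modules are available in the active environment, a small check like the one below may be useful. It is a hypothetical helper, not shipped with the project, built on `importlib.util.find_spec`, which locates a module without importing it.

```python
import importlib.util

# Module names taken from the list above; the last three need `pip install`.
STANDARD = ["tkinter", "threading", "queue", "time", "json", "os", "platform"]
THIRD_PARTY = ["sounddevice", "numpy", "vosk"]


def missing_modules(names):
    """Return the names that the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(STANDARD + THIRD_PARTY)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All required modules are available.")
```

If only the third-party names are reported, installing them with pip inside the activated environment should be enough. Keep in mind that `find_spec` only locates a module. A package that is found can still fail when it is actually imported.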

💓Usage

Make sure you have completed the installation section successfully.

Create a folder to store results

First, create a folder named “results” in the current directory.

- For Bash: `mkdir results`

- For PowerShell: `New-Item -ItemType Directory -Name results` (or simply `mkdir results`)
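If you prefer to create the folder from Python, for example at the start of a script, a minimal equivalent uses `pathlib`. The helper name `ensure_results_dir` is hypothetical, and the project may create the folder differently.

```python
from pathlib import Path


def ensure_results_dir(base="."):
    """Create <base>/results if it does not exist yet and return its path."""
    results = Path(base) / "results"
    results.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    return results


if __name__ == "__main__":
    print(ensure_results_dir().resolve())
```

Because of `exist_ok=True`, running it a second time is harmless.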

Download and unzip the Vosk model

For the core speech recognition model, we use a Vosk model, which is both lightweight and powerful.

Download the model ZIP archive from the command line (this works in both Bash and PowerShell).

Alternatively, download the ZIP file in your browser and drag it into the current folder.

Then extract the downloaded ZIP file into the current directory.

(Optional) After extraction, you can delete the ZIP file, since it is quite large!
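Downloading, extracting, and cleaning up can also be scripted with the standard library. This is a sketch that rests on assumptions. The model URL is left as a placeholder because the exact model archive is not specified here. The helpers `download`, `extract`, and `remove_archive` are hypothetical names and are not part of the project.

```python
import urllib.request
import zipfile
from pathlib import Path


def download(url, dest):
    """Download `url` to the local file `dest` (no progress bar, no retries)."""
    urllib.request.urlretrieve(url, dest)
    return Path(dest)


def extract(zip_path, target="."):
    """Extract a ZIP archive into `target`; return its top-level entry names."""
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
        return sorted({name.split("/")[0] for name in archive.namelist()})


def remove_archive(zip_path):
    """Delete the archive once it has been extracted (the optional step above)."""
    Path(zip_path).unlink()


# Example (MODEL_URL is a placeholder; use the archive you actually need):
# download(MODEL_URL, "model.zip")
# print(extract("model.zip"))   # top-level folder(s) of the model
# remove_archive("model.zip")
```

The name returned by `extract` is the model folder that the application will later need to find.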

Once these steps are complete, your current directory should contain the project files, the “results” folder, and the extracted model folder.

Check your current directory manually, or simply use the `tree` command in WSL.
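If `tree` is not at hand, a short Python helper can print a similar listing. This is a hypothetical sketch that only uses `pathlib` and mimics the output of `tree -L <depth>`, listing folders first and then files.

```python
from pathlib import Path


def tree(root=".", max_depth=1, _prefix="", _depth=0):
    """Yield the lines of a simple directory listing, similar to `tree -L max_depth`."""
    # Folders first (is_file() is False for them), then alphabetical order.
    entries = sorted(Path(root).iterdir(), key=lambda p: (p.is_file(), p.name.lower()))
    for index, entry in enumerate(entries):
        last = index == len(entries) - 1
        yield _prefix + ("└── " if last else "├── ") + entry.name
        if entry.is_dir() and _depth + 1 < max_depth:
            extension = "    " if last else "│   "
            yield from tree(entry, max_depth, _prefix + extension, _depth + 1)


if __name__ == "__main__":
    for line in tree(".", max_depth=2):
        print(line)
```

A depth of 1 or 2 is usually enough to check that the results folder and the model folder are next to the source code.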

Run Python code

Make sure to run the Python code inside the Conda environment you created earlier!

Switch to the main folder and run `python main.py`.

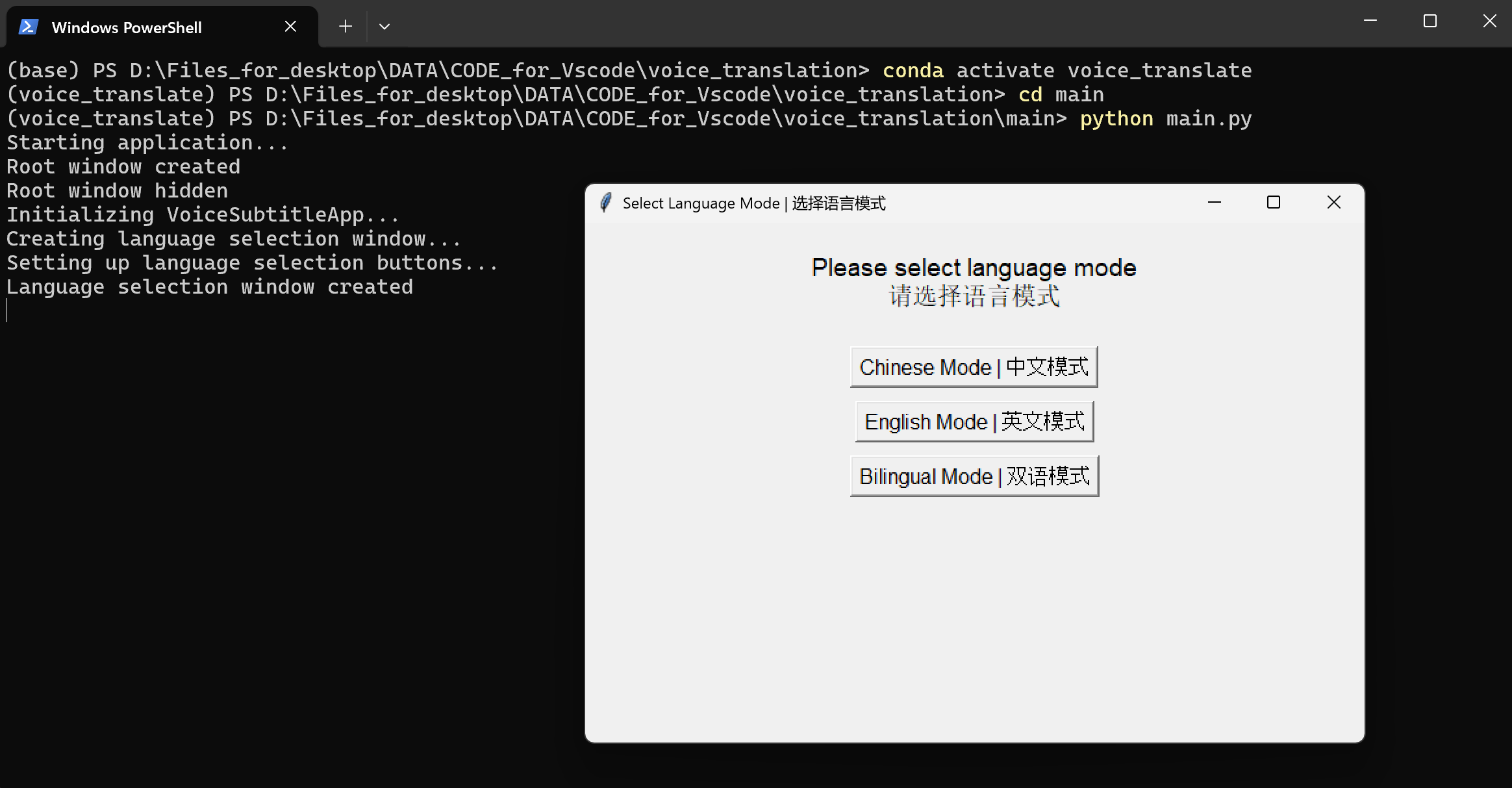

You should then see a pop-up window.

There are three modes to choose from: “Chinese Mode”, “English Mode”, and “Bilingual Mode”.

Choose the mode you like, and recognition will begin!

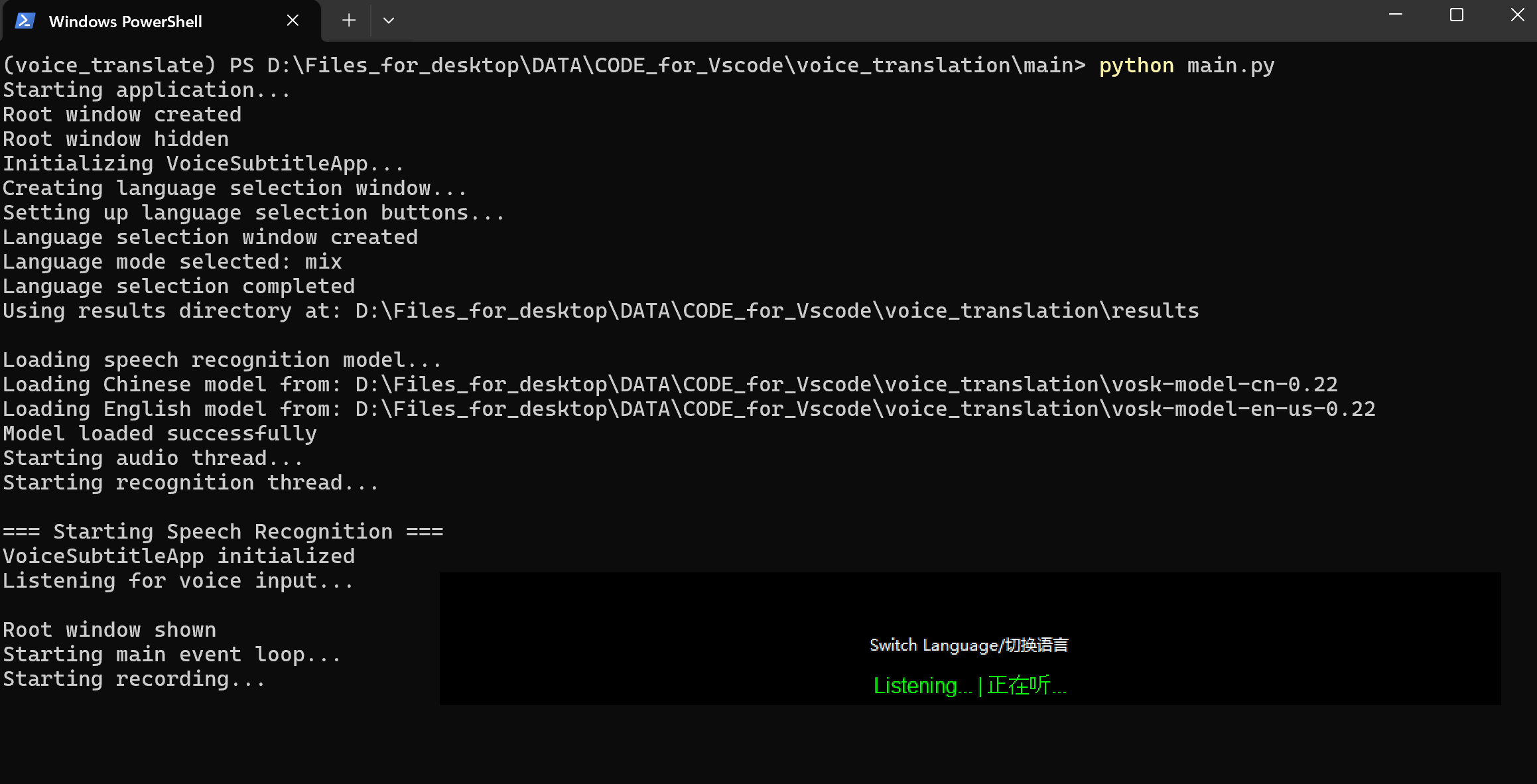

Warning: Loading the Vosk model may take approximately 10 to 20 seconds, and the bilingual one may take even longer. 😭 Sorry about that.

After the wait, a green message saying “Model loaded successfully!” appears. That means you can start speaking now!
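The mode selection and the loading wait can be pictured with the sketch below. It rests on some assumptions. The folder names in `MODE_TO_MODELS` are hypothetical placeholders for the models you extracted. The idea that “Bilingual Mode” loads both a Chinese and an English model is only a guess that would explain the longer wait. The loader is passed in as an argument. With Vosk installed, you would pass `vosk.Model`, whose constructor takes the model folder path.

```python
import time

# Hypothetical model folder names: replace them with the folders you extracted.
MODE_TO_MODELS = {
    "Chinese Mode": ("vosk-model-cn",),
    "English Mode": ("vosk-model-en",),
    # Assumption: the bilingual mode loads both models, hence the longer wait.
    "Bilingual Mode": ("vosk-model-cn", "vosk-model-en"),
}


def load_models(mode, loader):
    """Load every model needed by `mode` with `loader`; return (models, seconds)."""
    start = time.perf_counter()
    models = {path: loader(path) for path in MODE_TO_MODELS[mode]}
    elapsed = time.perf_counter() - start
    print("Model loaded successfully! ({:.1f} s)".format(elapsed))
    return models, elapsed


# With Vosk installed (not run here):
# from vosk import Model
# models, seconds = load_models("English Mode", Model)
```

Injecting the loader keeps the timing logic testable without a real model on disk.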

- The recognition results are displayed in real time in the terminal.

- To end the recognition, right-click with your mouse; this terminates the program.

- For the bilingual model, you can click “Select Language” in the middle of the window to switch languages.
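Real-time recognition typically uses a producer/consumer pattern built from the `threading` and `queue` modules listed earlier. The audio callback puts raw chunks on a queue, and a worker thread feeds them to the recognizer. The sketch below shows that pattern, but it is not the project's actual code. It relies on Vosk's `KaldiRecognizer` methods `AcceptWaveform`, `Result`, and `FinalResult`, where the latter two return JSON strings. A `None` sentinel stops the worker; that is a design choice of this sketch.

```python
import json
import queue
import threading


def recognition_worker(audio_chunks, recognizer, on_text):
    """Feed queued audio chunks to `recognizer` until a None sentinel arrives.

    `recognizer` is expected to behave like vosk.KaldiRecognizer:
    AcceptWaveform(bytes) -> bool, and Result()/FinalResult() -> JSON strings.
    """
    while True:
        data = audio_chunks.get()
        if data is None:  # sentinel: stop listening
            break
        if recognizer.AcceptWaveform(data):  # True once a phrase is complete
            text = json.loads(recognizer.Result()).get("text", "")
            if text:
                on_text(text)
    # Flush whatever the recognizer still holds after the last chunk.
    final = json.loads(recognizer.FinalResult()).get("text", "")
    if final:
        on_text(final)


def start_worker(recognizer, on_text):
    """Start the worker thread and return (queue, thread)."""
    chunks = queue.Queue()
    worker = threading.Thread(
        target=recognition_worker, args=(chunks, recognizer, on_text), daemon=True
    )
    worker.start()
    return chunks, worker


# Example wiring (needs a microphone plus the vosk and sounddevice packages):
# import sounddevice as sd
# from vosk import Model, KaldiRecognizer
# recognizer = KaldiRecognizer(Model("<model-folder>"), 16000)
# chunks, worker = start_worker(recognizer, print)
# with sd.RawInputStream(samplerate=16000, blocksize=8000, dtype="int16", channels=1,
#                        callback=lambda indata, frames, t, status: chunks.put(bytes(indata))):
#     input("Press Enter to stop...\n")
# chunks.put(None)
# worker.join()
```

A right-click stop could be wired the same way. In Tkinter on Windows, the right mouse button is the `<Button-3>` event, so a handler bound to it could put `None` on the queue before closing the window. How the project itself handles this is not shown here.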

Obtain transcriptions

The transcriptions are saved in the “results” folder as both a .txt file and a .md file.
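Saving the results could look like the following sketch. The timestamped file names and the Markdown layout are assumptions for illustration, since the project's actual naming is not described here. `save_transcript` is a hypothetical helper. Writing with `encoding="utf-8"` matters on Windows, where the default encoding may not handle Chinese characters.

```python
from datetime import datetime
from pathlib import Path


def save_transcript(lines, results_dir="results", stamp=None):
    """Write recognized lines to <stamp>.txt and <stamp>.md; return both paths."""
    stamp = stamp or datetime.now().strftime("%Y%m%d-%H%M%S")
    folder = Path(results_dir)
    folder.mkdir(parents=True, exist_ok=True)
    txt_path = folder / (stamp + ".txt")
    md_path = folder / (stamp + ".md")
    # Plain text: one recognized phrase per line.
    txt_path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    # Markdown: a title followed by a bullet list of phrases.
    md_body = "# Transcription " + stamp + "\n\n" + "\n".join("- " + line for line in lines) + "\n"
    md_path.write_text(md_body, encoding="utf-8")
    return txt_path, md_path


if __name__ == "__main__":
    print(save_transcript(["你好 世界", "hello world"]))
```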

🤖Discussion

Advantages

- If you want voice transcriptions for free instead of paying for professional apps, this one is for you!

- You can modify the source code to customize the GUI or implement more advanced features yourself!

- I think it’s a great chance to learn Python, isn’t it?

Disadvantages & Future Outlook

- WE WILL create a more lightweight version.

- WE WILL implement the speech-to-text conversion for mixed input of Chinese and English.

- WE WILL build a more aesthetically pleasing GUI.

- WE WILL optimize the transcriptions, including automatically adding punctuation, segmenting text, and organizing it into a coherent article.

👍Advertisement

My personal Blog: Xiyuan Yang’s Blog